Design an Agentic AI System That Autonomously Adapts to New Tasks | Anthropic AI PM Interview

Technical Product Questions for AI Product Managers: a step-by-step guide to answering agentic AI system design questions in a PM interview, as asked by Anthropic

You’re sitting across from your interviewer at a leading AI company (Anthropic, OpenAI, Google). The behavioural questions went well. You aced the product strategy question. Then they lean forward and ask:

“Design an AI system that can autonomously learn and execute new tasks without human intervention.”

Your mind races:

“Autonomously?

Learn new tasks?

This isn’t traditional software...”

This is the future of PM interviews, or more precisely, of AI PM interviews.

As AI agents become mainstream (GitHub Copilot Workspace, ChatGPT plugins, AutoGPT, Microsoft Copilot), product managers need to design systems that aren’t just predictive or generative - but agentic.

What makes this question different from traditional system design:

Not static software (it adapts and learns continuously)

Not traditional ML (not just prediction models)

Not just generative AI (not just creating content)

Requires thinking about autonomy, safety, and control

Tests understanding of an emerging AI paradigm

For a deep dive, see “How to answer technical questions in a PM Interview?”, which covers how to tackle any technical question.

Join our WhatsApp channel for quick PM insights and latest Job updates

What you’ll learn in this guide:

How to approach agentic AI system design questions

S.P.E.C.T.S. framework adapted for AI agents

Key components of autonomous AI architecture

Critical trade-offs: autonomy vs control, safety vs speed

Learning mechanisms: RAG, few-shot, fine-tuning

Safety and evaluation strategies

Why this matters:

2026-2027 are the years of AI agents. More broadly, this decade’s applications will be built on an agentic AI layer. Companies are shipping:

Coding agents (GitHub Copilot Workspace, Devin)

Customer service agents (Intercom AI Agent)

Research agents (Perplexity, Claude with tools)

Personal assistants (scheduling, email, tasks)

PMs who can design these systems will shape the next decade of software.


Now, let’s dive in and first understand agentic AI and how it is different.

Understanding Agentic AI: What Makes It Different

Before we apply the framework, let’s define what we’re actually building.

If you already know the fundamentals, skip this section and jump straight to “How to Answer Agentic AI System Design Questions?”

What is an Agentic AI System?

An agentic AI system is software that can:

Perceive: Understand tasks and environment

Decide: Make autonomous decisions toward goals

Act: Take actions in the world (not just output predictions)

Learn: Improve from experience without explicit programming

Adapt: Handle new situations and tasks

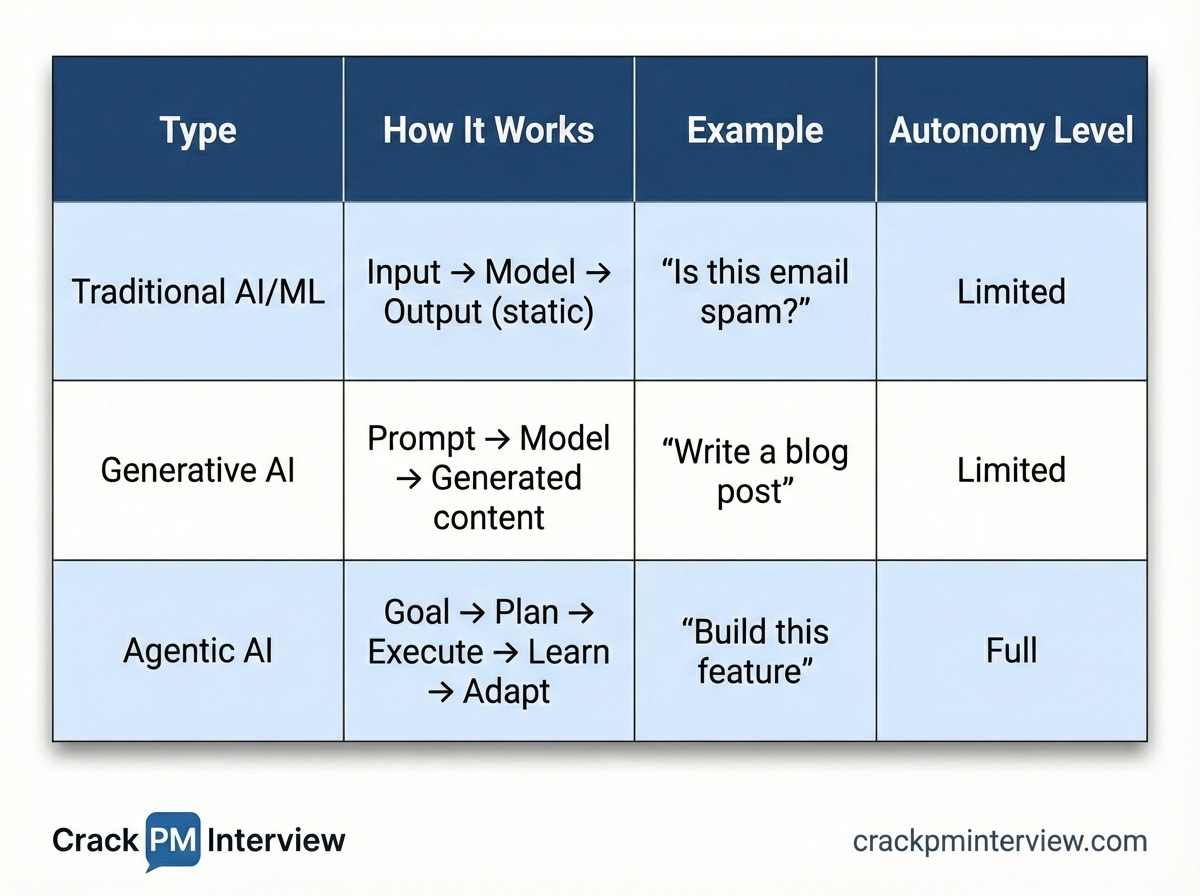
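The five capabilities above can be read as one loop. Here is a minimal sketch, assuming a toy environment (a counter the agent must drive to a target value). `ToyAgent`, its method names, and the step-doubling rule are illustrative assumptions, not how any real agent product is built.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical agent that drives a counter toward a goal value."""
    goal: int
    step_size: int = 1
    history: list = field(default_factory=list)  # experience collected so far

    def perceive(self, environment: dict) -> int:
        # Perceive: read the part of the environment relevant to the goal.
        return environment["counter"]

    def decide(self, observed: int) -> int:
        # Decide: choose a signed step toward the goal without overshooting.
        gap = self.goal - observed
        if gap == 0:
            return 0
        step = min(self.step_size, abs(gap))
        return step if gap > 0 else -step

    def act(self, environment: dict, delta: int) -> None:
        # Act: change the environment instead of only emitting a prediction.
        environment["counter"] += delta

    def learn(self, observed: int, delta: int) -> None:
        # Learn/Adapt: record the outcome and take bigger steps while far away.
        self.history.append((observed, delta))
        if abs(self.goal - (observed + delta)) > 4 * self.step_size:
            self.step_size *= 2

    def run(self, environment: dict, max_steps: int = 50) -> int:
        # Returns the number of actions taken before the goal was reached.
        for steps in range(max_steps):
            observed = self.perceive(environment)
            delta = self.decide(observed)
            if delta == 0:
                return steps
            self.act(environment, delta)
            self.learn(observed, delta)
        return max_steps


env = {"counter": 0}
agent = ToyAgent(goal=20)
steps_taken = agent.run(env)
```

The point of the sketch is the shape of the loop: the agent changes its own behaviour (`step_size`) based on what it observed, which is what separates it from a fixed script.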

How is Agentic AI different from Traditional AI/ML and Generative AI?

The Five Key Characteristics of Agentic AI Systems:

Goal-Oriented - Works toward defined objectives, not just responding to prompts

Autonomous - Makes decisions without constant human input

Adaptive - Learns from experience and new situations

Action-Taking - Interacts with systems and environment

Self-Improving - Gets better through feedback loops

Real-World Examples:

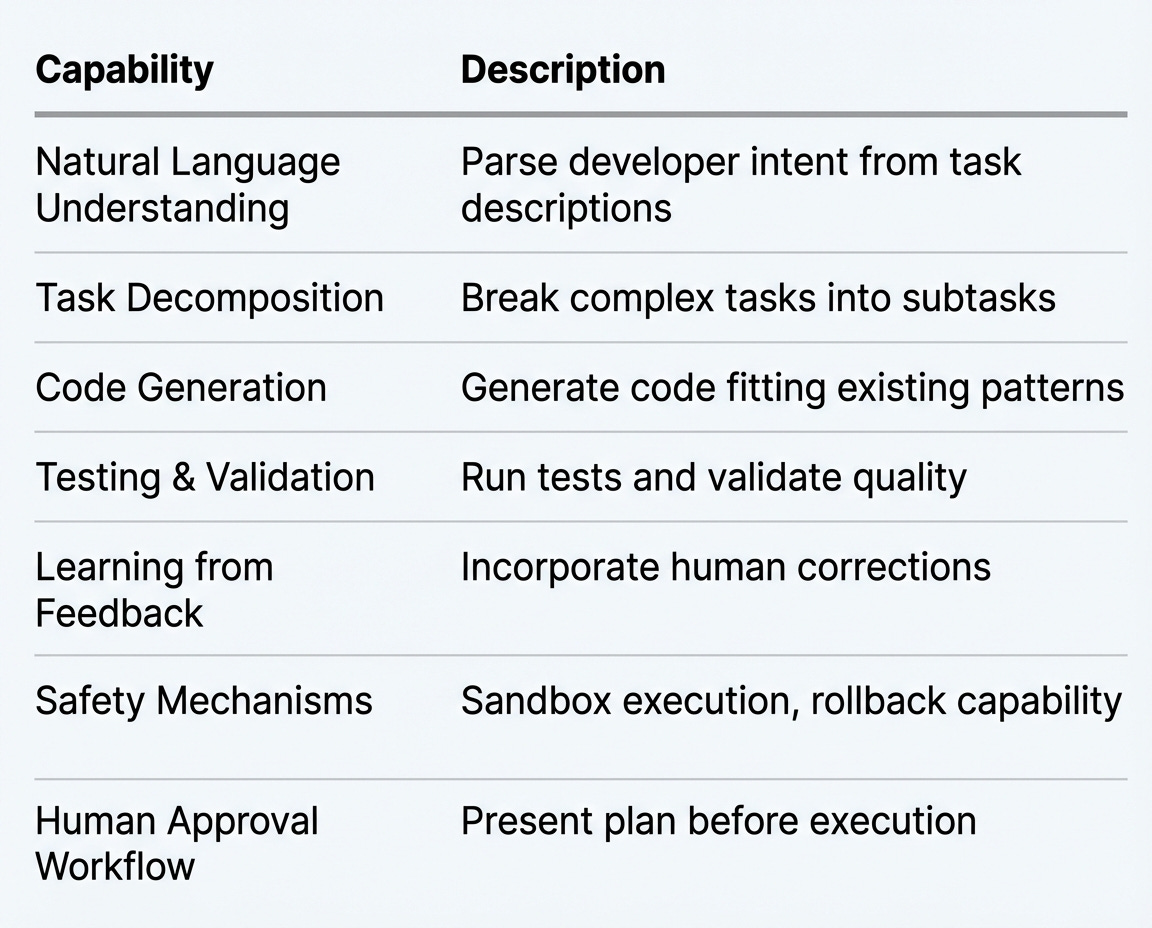

1) GitHub Copilot Workspace:

Understands issue description from natural language

Plans implementation across multiple files

Writes code that fits existing patterns

Runs tests and validates functionality

Creates pull request with documentation

Adapts to your codebase style over time

2) Customer Service AI Agents (Intercom, Zendesk):

Understands customer query intent

Searches knowledge base for relevant articles

Takes actions (processes refunds, updates orders, schedules callbacks)

Escalates complex issues to humans

Learns from successful resolutions to improve

3) Research Agents (Perplexity, Claude with tools):

Takes research question as input

Browses web, reads papers, analyzes sources

Synthesizes findings into coherent narrative

Cites sources and acknowledges uncertainty

Adapts search strategy based on what it finds

4) Coding Agents (Devin, Cursor):

Receives feature request or bug report

Analyzes codebase to understand architecture

Plans and implements solution

Debugs issues autonomously

Learns team coding conventions over time

The Key Difference: Action + Learning

Traditional AI systems are reactive - they respond to inputs with outputs.

Agentic AI systems are proactive - they pursue goals, take actions, and improve from experience.

This shift requires fundamentally different design thinking. You’re not designing a tool that users operate. You’re designing an autonomous collaborator that operates alongside users.

Why Interviewers Will Ask This in AI PM Interviews:

✅ Tests understanding of the agentic AI paradigm

✅ Evaluates ability to design with uncertainty

✅ Checks safety and control thinking

✅ Assesses product sense for AI products

Common Mistakes Candidates Might Make:

❌ Treating it like traditional ML (“train → deploy → done”)

❌ Ignoring safety mechanisms

❌ Over-promising capabilities

❌ Missing the feedback/learning loop

❌ Forgetting human oversight for MVP


Now, let’s dive in and answer this question.

How to Answer Agentic AI System Design Questions?

Here’s a proven, repeatable framework that works for any system design question and, in fact, for any technical question thrown at you.

Use the S.P.E.C.T.S. Framework below:

S - Scope - Clarify the problem and context.

P - Product Requirements - Identify and prioritize functional requirements.

E - Engineering Constraints - Define non-functional requirements.

C - Components - Design high-level system architecture.

T - Trade-offs - Discuss alternatives and evolution path.

S - Success Metrics - Define validation, guardrails and connect everything to measurable outcomes.

Let’s break down each step.

Step 1: Scope - Clarify the Problem and Context

Goal: Define what we’re building before diving into architecture.

I) Ask Clarifying Questions

Task Domain:

“What types of tasks should the AI handle? Narrow domain or general purpose?”

“Specific vertical (coding, customer service, research) or horizontal?”

Autonomy Level:

“How autonomous should it be? Fully autonomous or human-in-the-loop?”

“Can it take actions without approval, or suggest and wait?”

Adaptation Scope:

“Adapt to new tasks in same domain or completely new domains?”

“Few-shot learning (needs examples) or zero-shot (just instructions)?”

Scale & Users:

“How many users? Single-tenant or multi-tenant?”

“Enterprise or consumer use case?”

Interviewer’s likely response:

“Focus on building an AI agent for software development teams. It should handle coding tasks (write code, debug, review). Start with human oversight - AI suggests and executes after approval. Design for 10K developers initially, scale to 100K. The AI should adapt to new coding frameworks within days using a few examples. Safety is critical - cannot deploy to production without explicit approval.”

II) Now, Restate the Problem

“So we’re building an AI coding agent for software development teams that can:

Execute coding tasks (implement features, debug, code review)

Adapt to new frameworks and patterns with 3-5 examples

Operate with human oversight (propose → approve → execute workflow)

Start with 10K developers, plan for 100K

Safety-first: production deployments require explicit approval”
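The propose → approve → execute workflow can be sketched as a small state machine. This is a minimal illustration, assuming a single human reviewer per change. `Stage` and `ProposedChange` are hypothetical names, not part of any real tool.

```python
from enum import Enum


class Stage(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"
    EXECUTED = "executed"


class ProposedChange:
    """A change the agent wants to make; nothing runs until a human approves."""

    def __init__(self, description: str):
        self.description = description
        self.stage = Stage.PROPOSED
        self.approved_by = None

    def review(self, reviewer: str, approve: bool) -> None:
        # Only a freshly proposed change can be approved or rejected.
        if self.stage is not Stage.PROPOSED:
            raise RuntimeError("only a proposed change can be reviewed")
        self.stage = Stage.APPROVED if approve else Stage.REJECTED
        self.approved_by = reviewer if approve else None

    def execute(self) -> str:
        # The gate: execution is refused without explicit approval.
        if self.stage is not Stage.APPROVED:
            raise PermissionError("explicit human approval is required")
        self.stage = Stage.EXECUTED
        return f"executed: {self.description} (approved by {self.approved_by})"
```

Making approval a state the code checks, rather than a convention people follow, is what lets you later relax the gate for low-risk actions without redesigning the workflow.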

III) State Assumptions and Non-Goals

Assumptions:

✅ Access to code repositories (GitHub, GitLab)

✅ Integration with developer tools (IDEs, CI/CD)

✅ Access to foundation LLMs (GPT-4, Claude)

Non-Goals:

❌ NOT replacing human developers (augmentation only)

❌ NOT handling infrastructure/DevOps initially

❌ NOT autonomous production deployment

IV) Define Success

“Success means the AI agent can take a coding task description, generate working code that passes tests, learn new frameworks from 3-5 examples, and reduce developer time by 30%.”

Step 2: Product Requirements - Define What to Build

Goal: Identify core functionality and prioritize ruthlessly before thinking about implementation.

I) User Segments

Primary:

Software developers (individual contributors)

Engineering managers (assigning tasks to AI)

Secondary:

QA engineers (validating AI output)

Tech leads (training AI on team patterns)

II) Core Use Cases

Use Case 1: Autonomous Task Execution

Developer describes task: “Add rate limiting to the API”

AI analyzes codebase and understands context

AI creates execution plan (subtasks breakdown)

AI implements changes across multiple files

AI runs tests and validates

Developer reviews and approves

AI commits and creates pull request

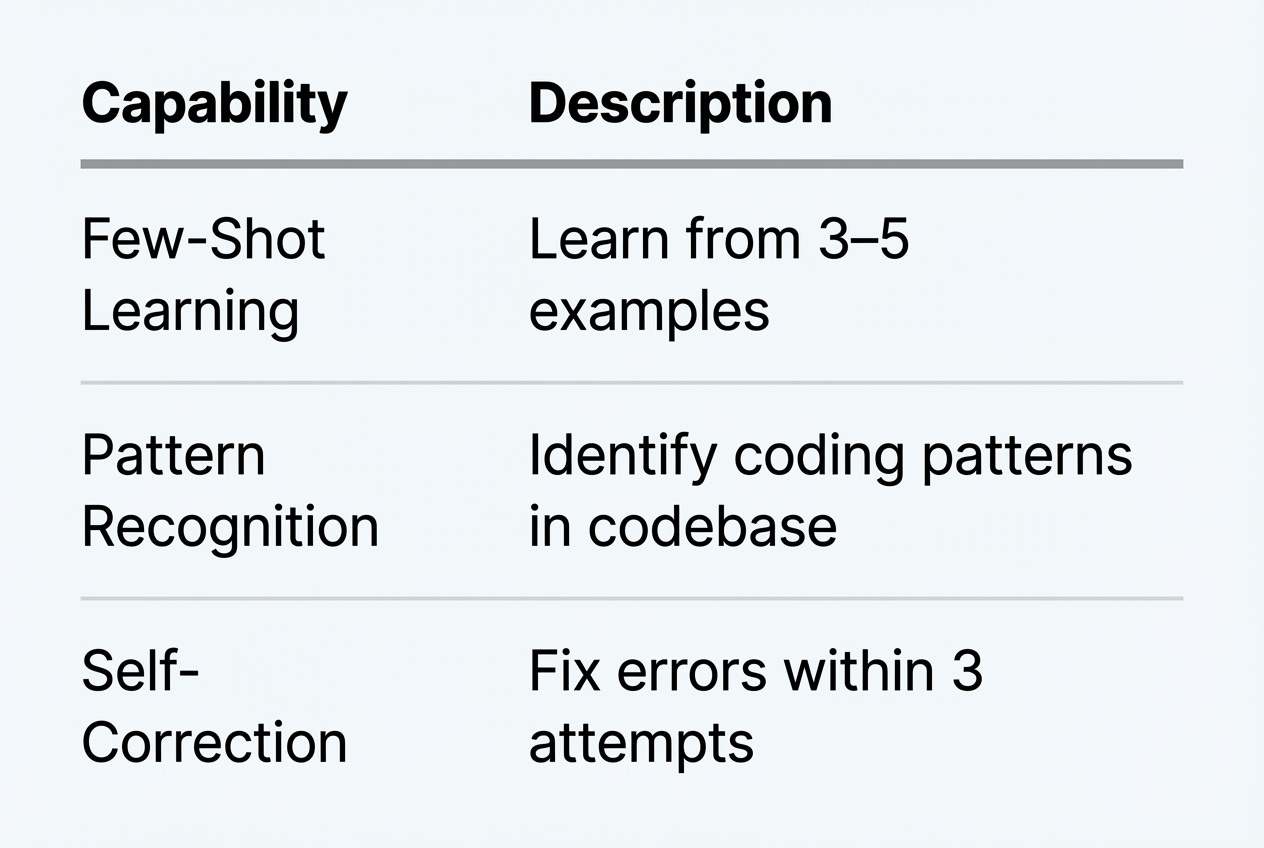

Use Case 2: Learning New Patterns

AI encounters unfamiliar framework

Developer provides 3-5 example implementations

AI extracts pattern and stores in memory

AI applies pattern to current and future tasks
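One way to picture Use Case 2 is few-shot prompting backed by a pattern store: keep the developer’s examples, retrieve the closest ones, and prepend them to the new task. The sketch below is an assumption-heavy stand-in. `PatternMemory` is a hypothetical class, and word-overlap (Jaccard) ranking replaces the embedding search a production system would more likely use.

```python
class PatternMemory:
    """Hypothetical in-memory pattern store keyed by framework name."""

    def __init__(self):
        self._examples = {}  # framework -> list of (task, code) pairs

    def add_example(self, framework: str, task: str, code: str) -> None:
        self._examples.setdefault(framework, []).append((task, code))

    def retrieve(self, framework: str, task: str, k: int = 3) -> list:
        # Rank stored examples by word overlap with the new task description.
        query = set(task.lower().split())

        def score(example):
            words = set(example[0].lower().split())
            union = query | words
            return len(query & words) / len(union) if union else 0.0

        ranked = sorted(self._examples.get(framework, []), key=score, reverse=True)
        return ranked[:k]

    def build_prompt(self, framework: str, task: str, k: int = 3) -> str:
        # Few-shot prompt: retrieved examples first, then the new task.
        parts = [f"Framework: {framework}"]
        for example_task, example_code in self.retrieve(framework, task, k):
            parts.append(f"Task: {example_task}\nCode:\n{example_code}")
        parts.append(f"Task: {task}\nCode:")
        return "\n\n".join(parts)


memory = PatternMemory()
memory.add_example("webfw", "add a route for users", "route('/users', list_users)")
memory.add_example("webfw", "write a database migration", "migrate('add_users')")
memory.add_example("webfw", "add a background job", "job('cleanup', every='1h')")
prompt = memory.build_prompt("webfw", "add a route for orders", k=1)
```

Note that nothing is retrained here: the “learning” is storing examples and reusing them at prompt time, which is why it can work from a handful of examples.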

Use Case 3: Self-Correction

AI generates code implementation

Automated tests fail

AI analyzes failure and hypothesizes fix

AI iterates (up to 3 attempts)

If still failing, escalates to human

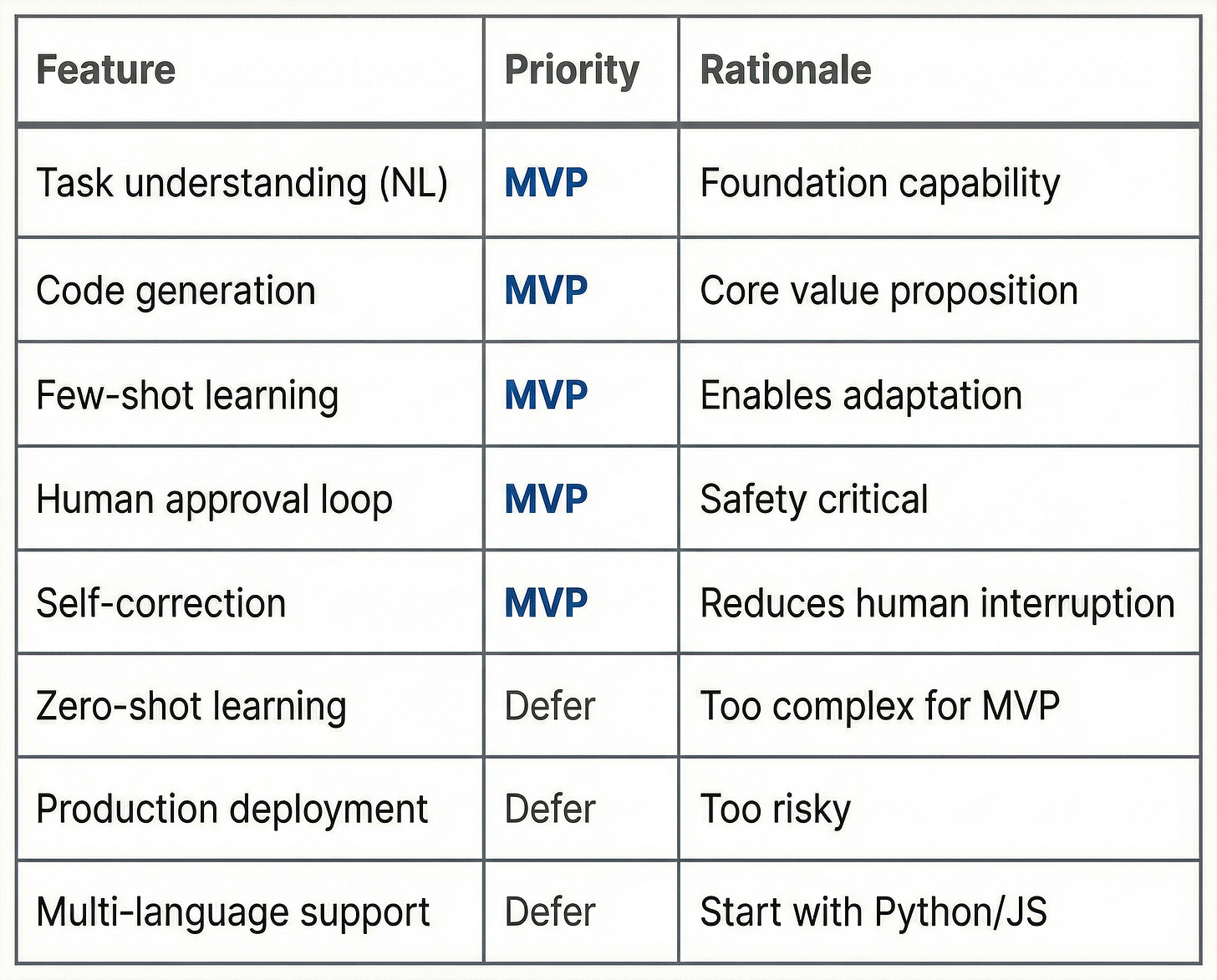
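The self-correction flow is essentially a bounded retry loop with an escalation exit. Here is a minimal sketch, assuming “up to 3 attempts” means three attempts in total. `generate` and `run_tests` are hypothetical stand-ins for the model call and the test runner.

```python
from typing import Callable, Optional


def self_correct(
    generate: Callable[[Optional[str]], str],
    run_tests: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> dict:
    """Generate code, retry on test failure, escalate after max_attempts.

    generate(feedback) returns candidate code; feedback is None on the first try.
    run_tests(code) returns None on success or a failure message otherwise.
    """
    feedback = None
    for attempt in range(1, max_attempts + 1):
        code = generate(feedback)    # the previous failure informs the next try
        feedback = run_tests(code)
        if feedback is None:
            return {"status": "passed", "attempts": attempt, "code": code}
    # Still failing after the last attempt: hand over to a human with context.
    return {"status": "escalated", "attempts": max_attempts, "last_failure": feedback}


# Toy stand-ins: the first draft is buggy, the revision after feedback passes.
def fake_generate(feedback):
    return "fixed code" if feedback else "buggy code"


def fake_run_tests(code):
    return None if code == "fixed code" else "AssertionError in test_api"


result = self_correct(fake_generate, fake_run_tests)
```

The cap matters for product reasons as much as technical ones: each attempt costs tokens and time, and an agent that loops silently erodes trust faster than one that asks for help.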

III) Functional Requirements - MVP

Core Capabilities (P0 - Must Have):

Adaptation Capabilities (P0 - Must Have):

IV) MVP vs Nice-to-Have Prioritization

Why this prioritization: We’re focusing on supervised autonomy - human oversight builds trust, learning from corrections improves the AI, and starting narrow ensures quality.

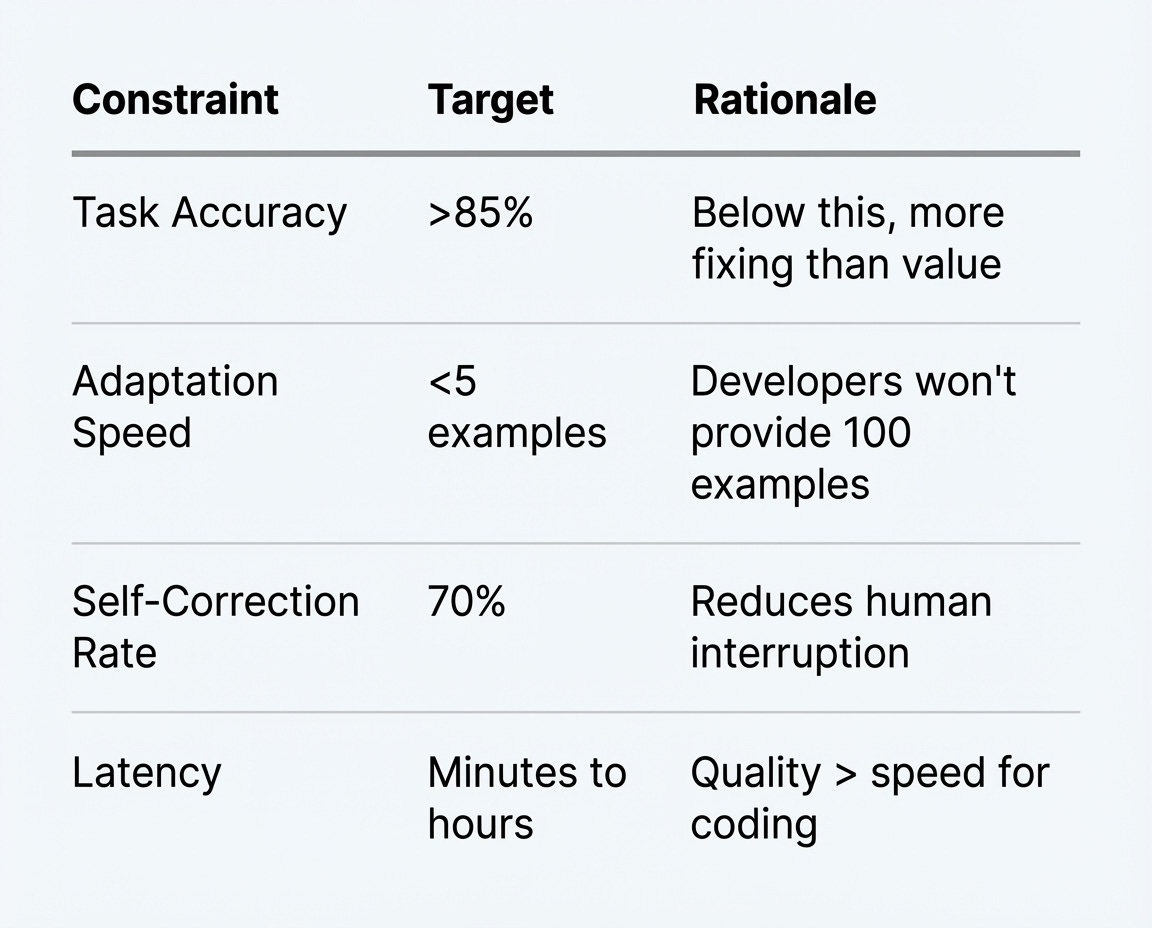

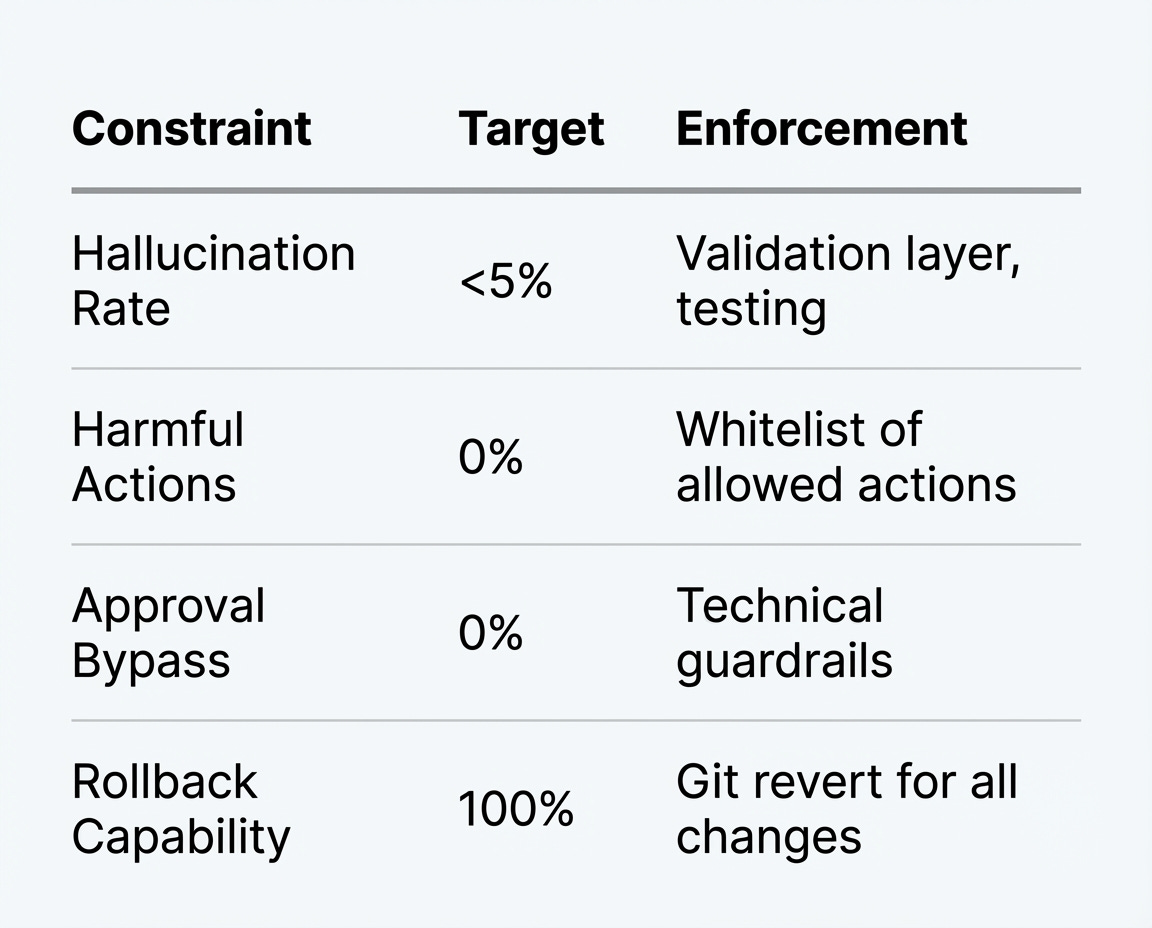

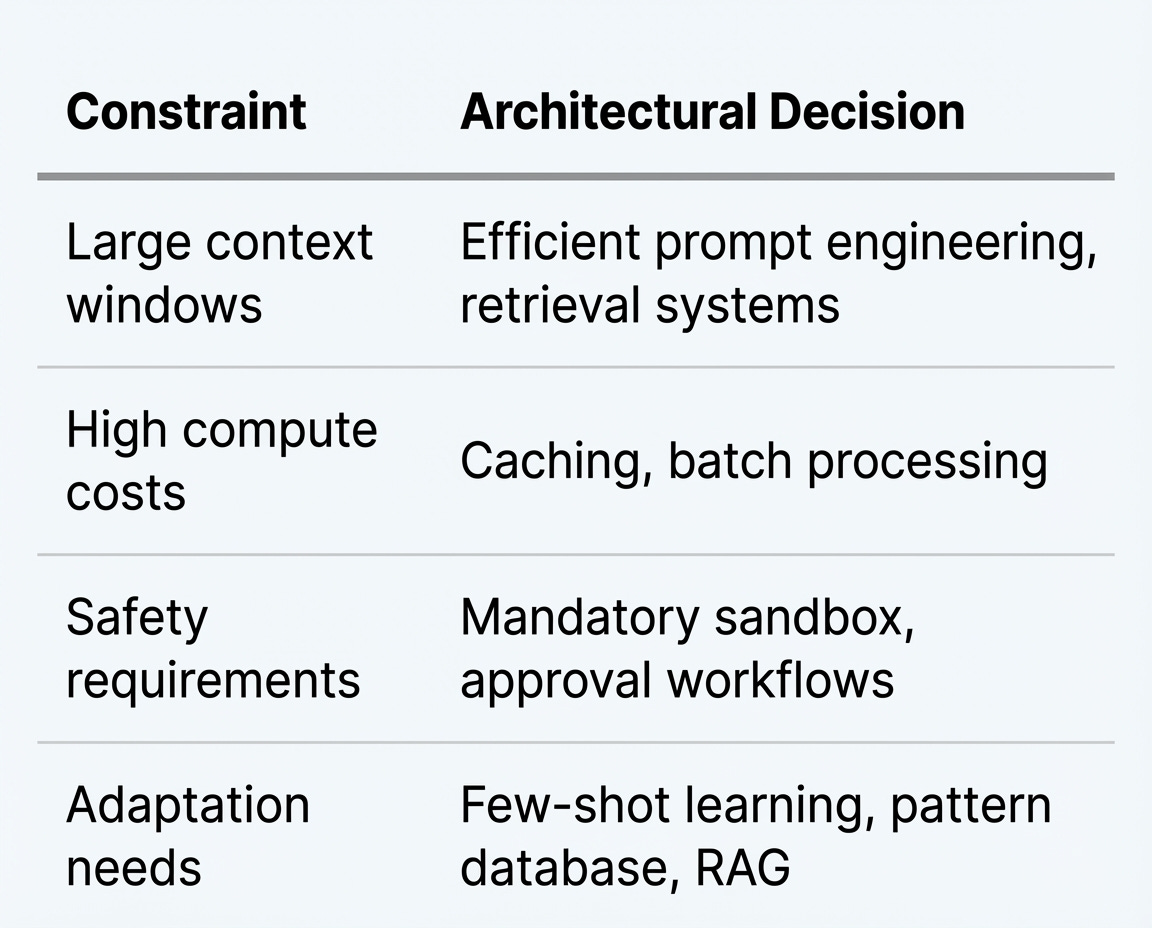

Step 3: Engineering Constraints - Technical Boundaries

Model Performance Requirements

Safety & Reliability

Computational Resources

Model Inference: Large context windows (32K-128K tokens)

Cost: $0.10-$1.00 per task (LLM API costs)

Memory: Vector database for pattern storage
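To see what the per-task cost range means at scale, a quick back-of-the-envelope calculation helps. The $0.10-$1.00 per task and the 10K/100K developer counts come from the text above; the 5 tasks per developer per day and 22 working days per month are assumptions made purely for illustration.

```python
def monthly_cost_range(developers, tasks_per_dev_per_day, working_days=22,
                       low_cents=10, high_cents=100):
    """Return (low, high) monthly LLM spend in whole dollars.

    tasks_per_dev_per_day and working_days are assumed inputs, not figures
    from the interview prompt. Costs are kept in cents to avoid float error.
    """
    tasks = developers * tasks_per_dev_per_day * working_days
    return tasks * low_cents // 100, tasks * high_cents // 100


initial = monthly_cost_range(10_000, 5)    # (110000, 1100000)
at_scale = monthly_cost_range(100_000, 5)  # (1100000, 11000000)
```

Under these assumptions the bill ranges from roughly $110K to $1.1M a month at launch, and ten times that at 100K developers, which is why cost per task belongs among the engineering constraints rather than being an afterthought.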

How These Constraints Shape Architecture:

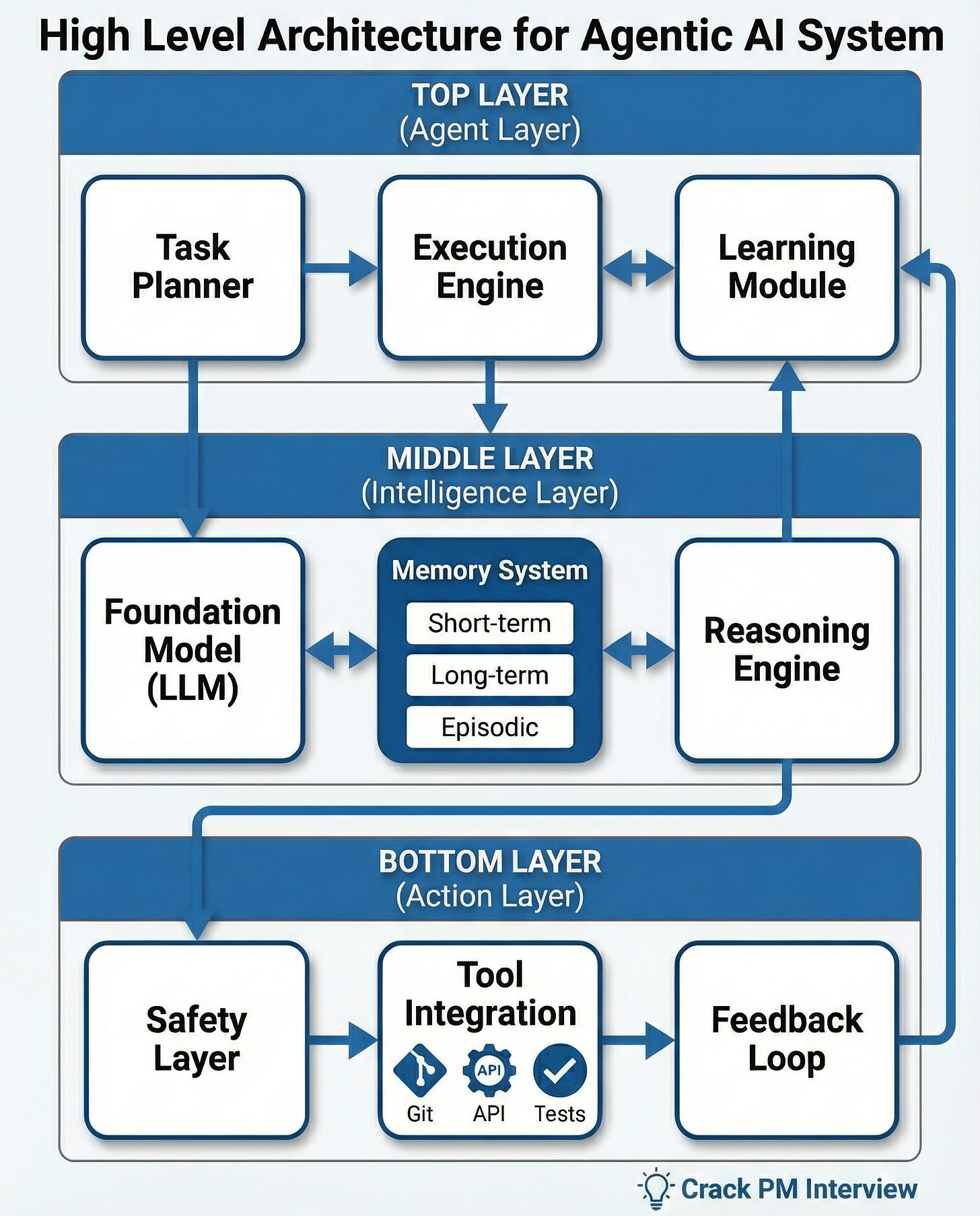

Step 4: Components - High-Level Architecture

Goal: Map out major system components and how they interact without getting lost in implementation details.

The 9 Core Components

I see nine main components organized into three layers:

Agent Layer (Decision & Orchestration):

Task Planner - Decomposes tasks, creates execution plans

Execution Engine - Orchestrates subtask execution

Learning Module - Adapts from feedback and examples

Intelligence Layer (Reasoning & Memory):

Foundation Model - Large language model (GPT-4, Claude)

Memory System - Stores context, patterns, history

Reasoning Engine - Decision-making, confidence scoring

Action Layer (Execution & Safety):

Tool Integration - Connects to Git, APIs, test runners

Safety Layer - Validates actions, prevents harm

Feedback Loop - Collects results, enables learning
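To make the layering concrete, here is a minimal wiring sketch covering five of the nine components. The class names mirror the list above, but every method, the stubbed planner output, and the blocked `deploy` action are assumptions for illustration. The Foundation Model, Memory System, Reasoning Engine, and Learning Module are left out to keep it short.

```python
from dataclasses import dataclass, field


@dataclass
class Subtask:
    name: str
    action: str  # e.g. "edit_file", "run_tests", "deploy"


class TaskPlanner:
    def plan(self, task: str) -> list:
        # Stub: a real planner would ask the foundation model to decompose the task.
        return [Subtask(f"implement: {task}", "edit_file"),
                Subtask(f"validate: {task}", "run_tests")]


class SafetyLayer:
    BLOCKED_ACTIONS = {"deploy"}  # assumption: the agent never deploys on its own

    def allows(self, subtask: Subtask) -> bool:
        return subtask.action not in self.BLOCKED_ACTIONS


class ToolIntegration:
    def run(self, subtask: Subtask) -> str:
        # Stub: a real integration would call Git, an API, or a test runner.
        return f"ok:{subtask.action}"


@dataclass
class FeedbackLoop:
    results: list = field(default_factory=list)

    def record(self, subtask: Subtask, outcome: str) -> None:
        self.results.append((subtask.name, outcome))


class ExecutionEngine:
    """Orchestrates subtasks: plan, check safety, act, record the outcome."""

    def __init__(self, planner, safety, tools, feedback):
        self.planner, self.safety = planner, safety
        self.tools, self.feedback = tools, feedback

    def handle(self, task: str) -> list:
        for subtask in self.planner.plan(task):
            if self.safety.allows(subtask):
                outcome = self.tools.run(subtask)
            else:
                outcome = "blocked"
            self.feedback.record(subtask, outcome)
        return self.feedback.results


engine = ExecutionEngine(TaskPlanner(), SafetyLayer(), ToolIntegration(), FeedbackLoop())
results = engine.handle("add rate limiting")
```

The design choice worth calling out in an interview is that the Safety Layer sits between the decision and the action, so every tool call passes through it regardless of which component requested it.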

Component Responsibilities
